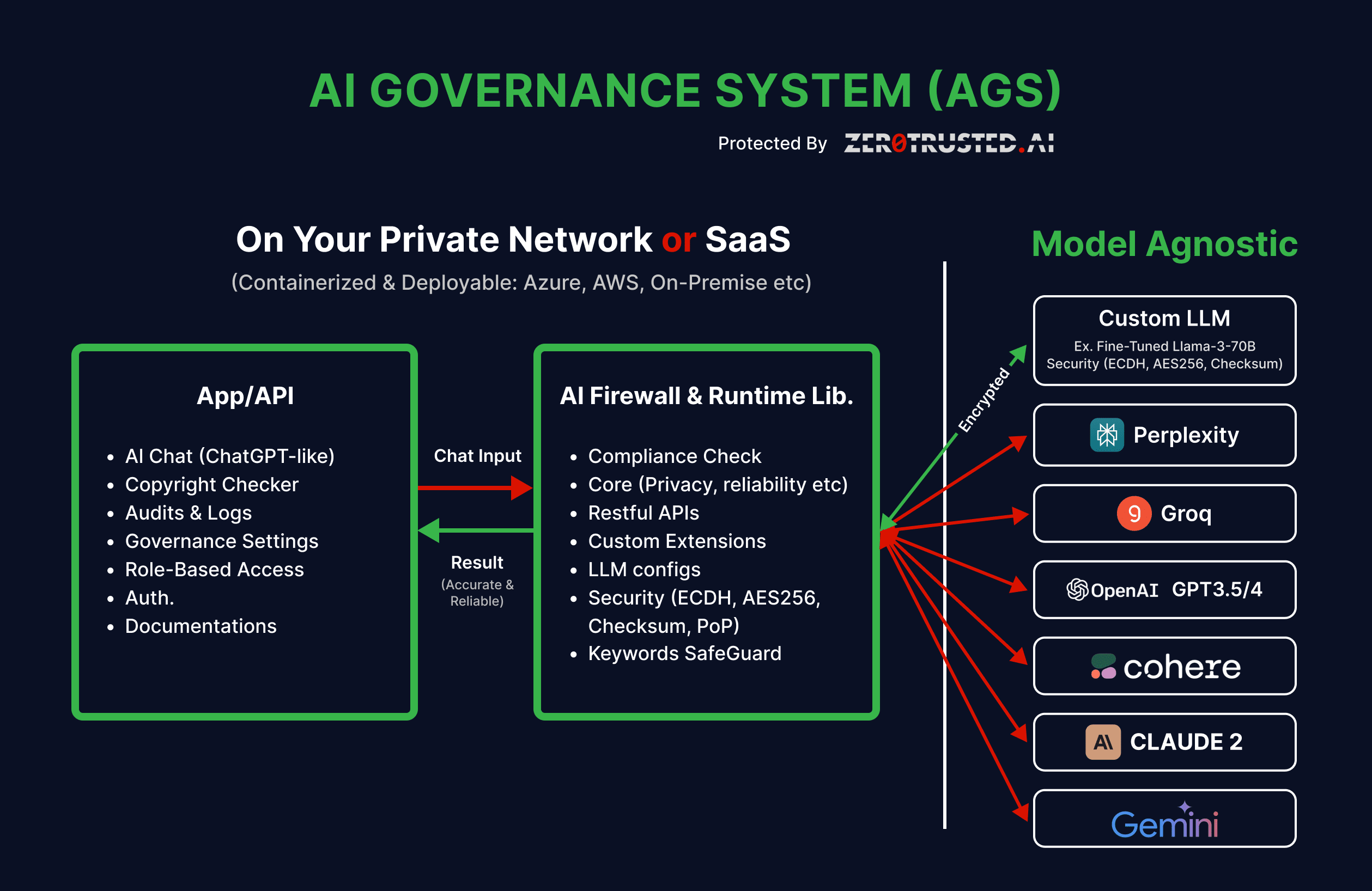

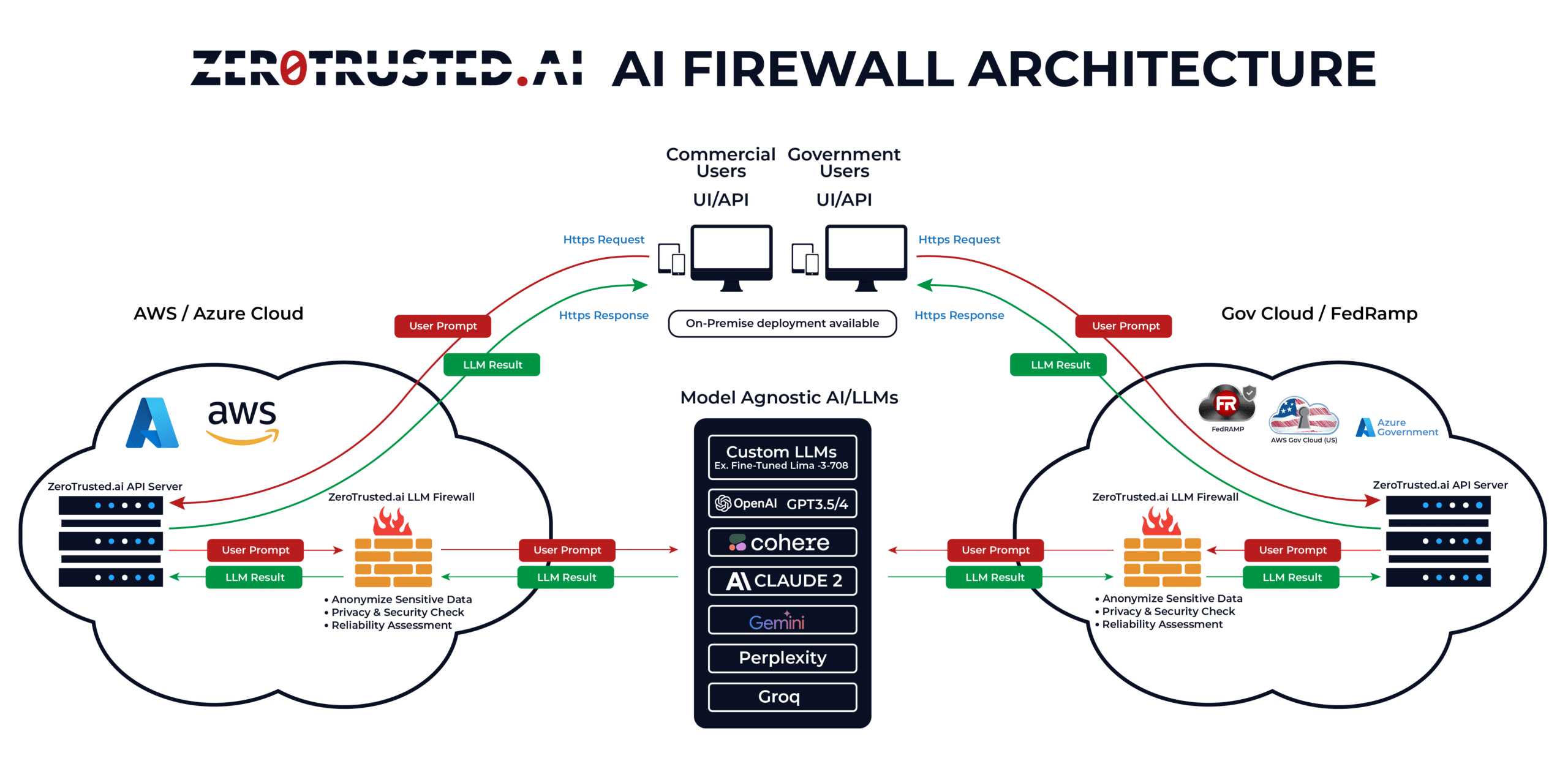

ZeroTrusted.ai’s AI Governance System (AGS) is a next-generation platform designed to help organizations bring order, oversight, and control to the rapidly growing use of AI. From Large Language Models (LLMs) and AI Agents to embedded tools like copilots and chatbots, AGS ensures that every interaction aligns with your security, privacy, and compliance requirements.

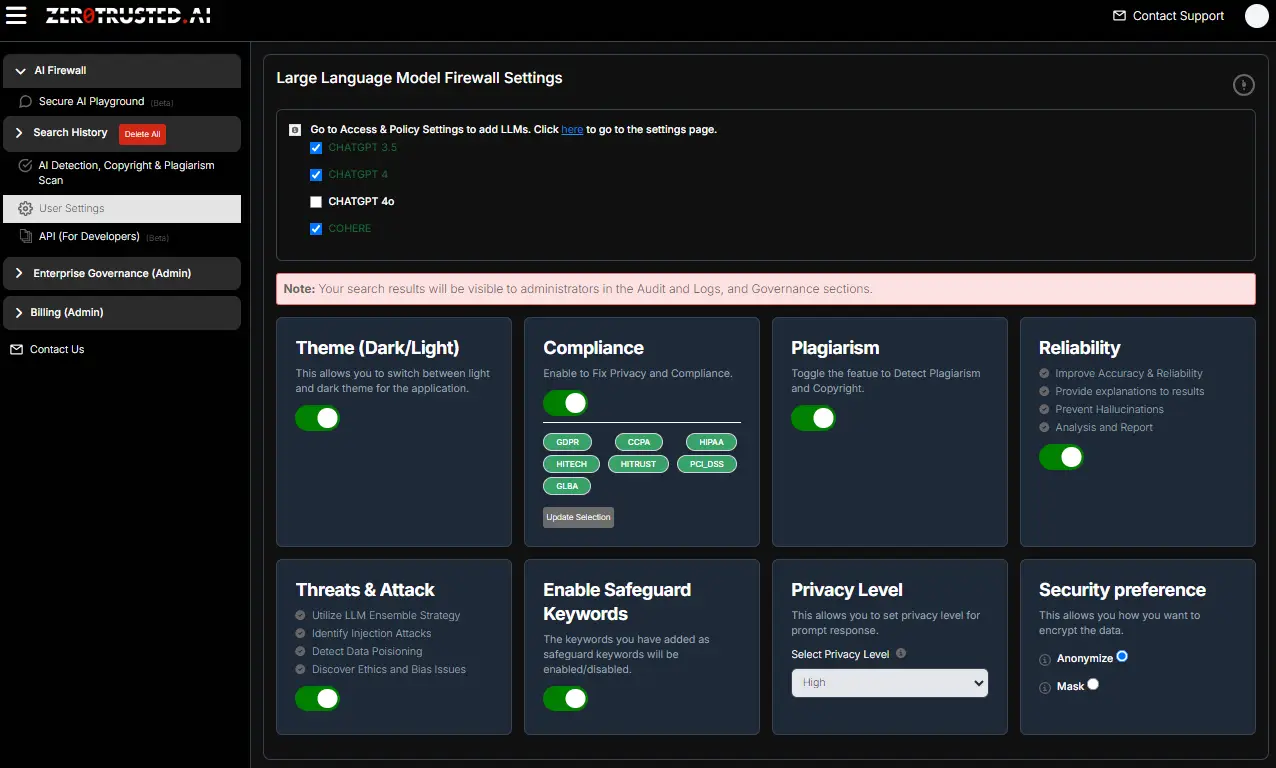

Available as a SaaS platform or deployable in your private environment, AGS integrates with your enterprise policies and is powered by ZeroTrusted.ai’s LLM Firewall. The firewall monitors AI activity in real time, enforces policy, and automatically blocks sensitive or non-compliant interactions before they happen.

With centralized governance, risk visibility, and compatibility with Azure, Google Cloud, and AWS, AGS lets enterprises adopt AI securely at scale without losing control.

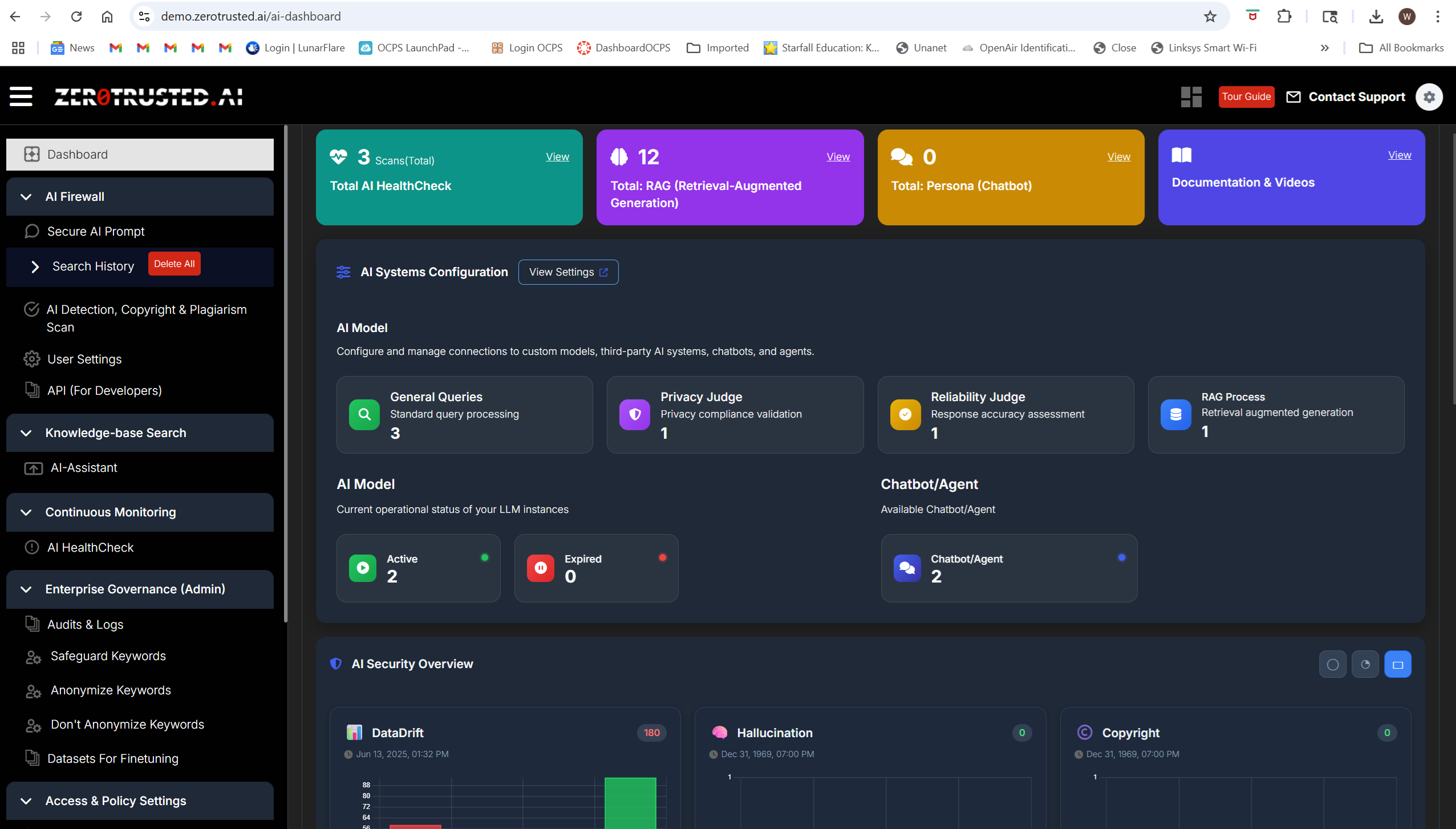

ZeroTrusted.ai’s newest feature is the AI HealthCheck.

AI HealthCheck continuously monitors AI systems – including LLMs, SLMs, and Vector Databases – for security, reliability, and privacy issues.

This real-time monitoring provides your team with ongoing insights into the health and safety of your AI systems, allowing for proactive management and swift responses to any emerging threats.

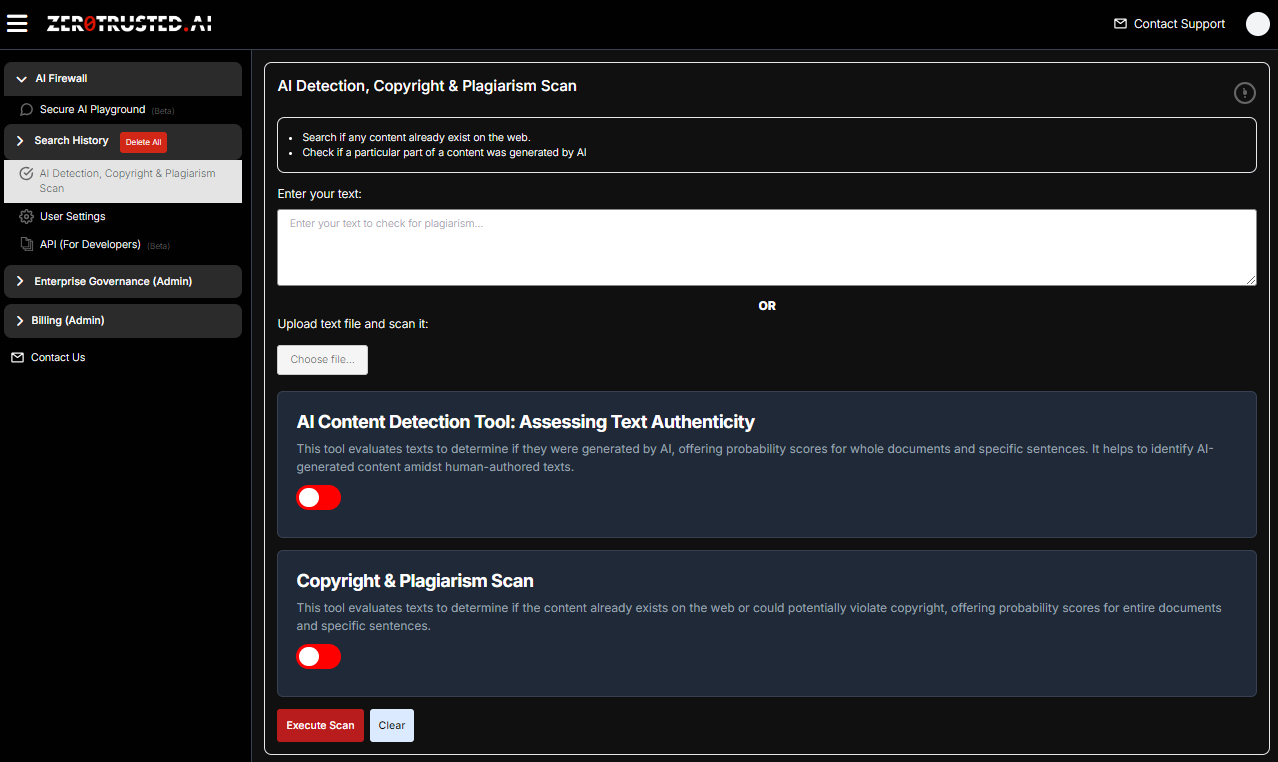

Our platform proactively detects potential copyright infringements and plagiarism in AI-generated content, preserving the integrity of your work.

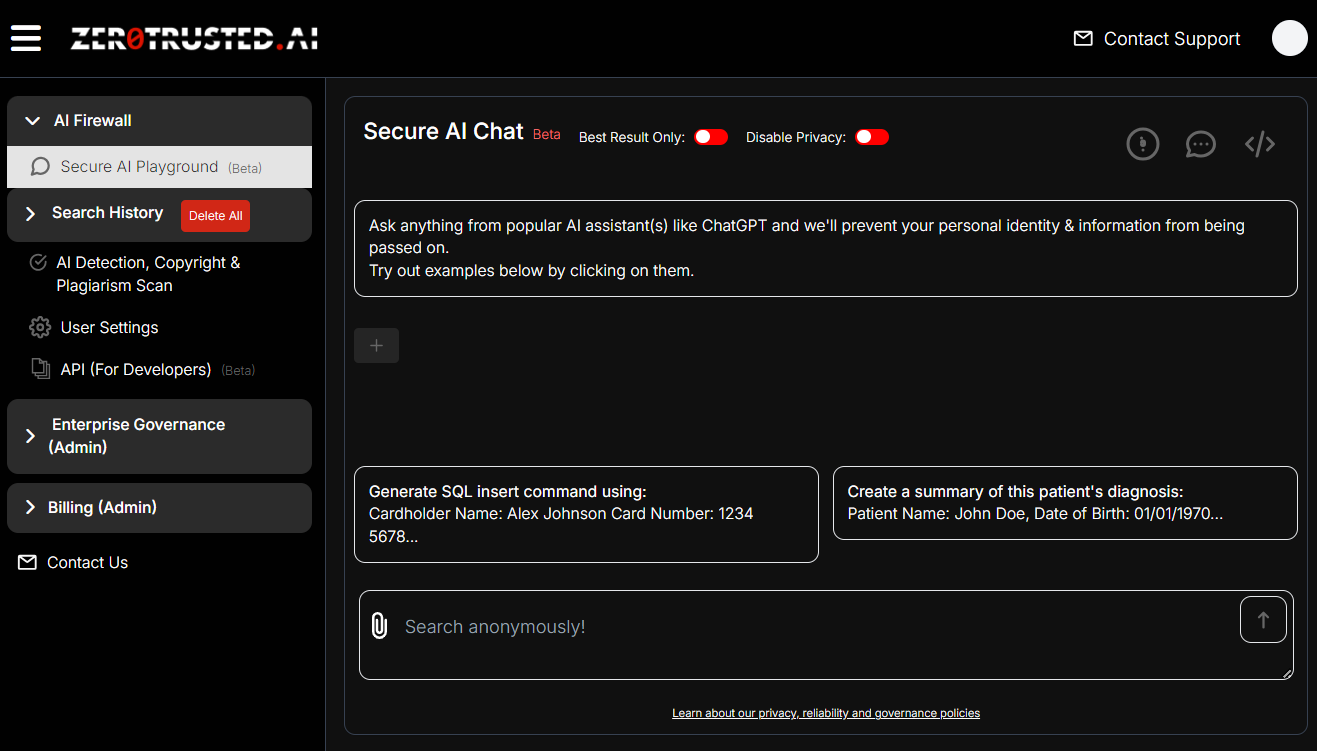

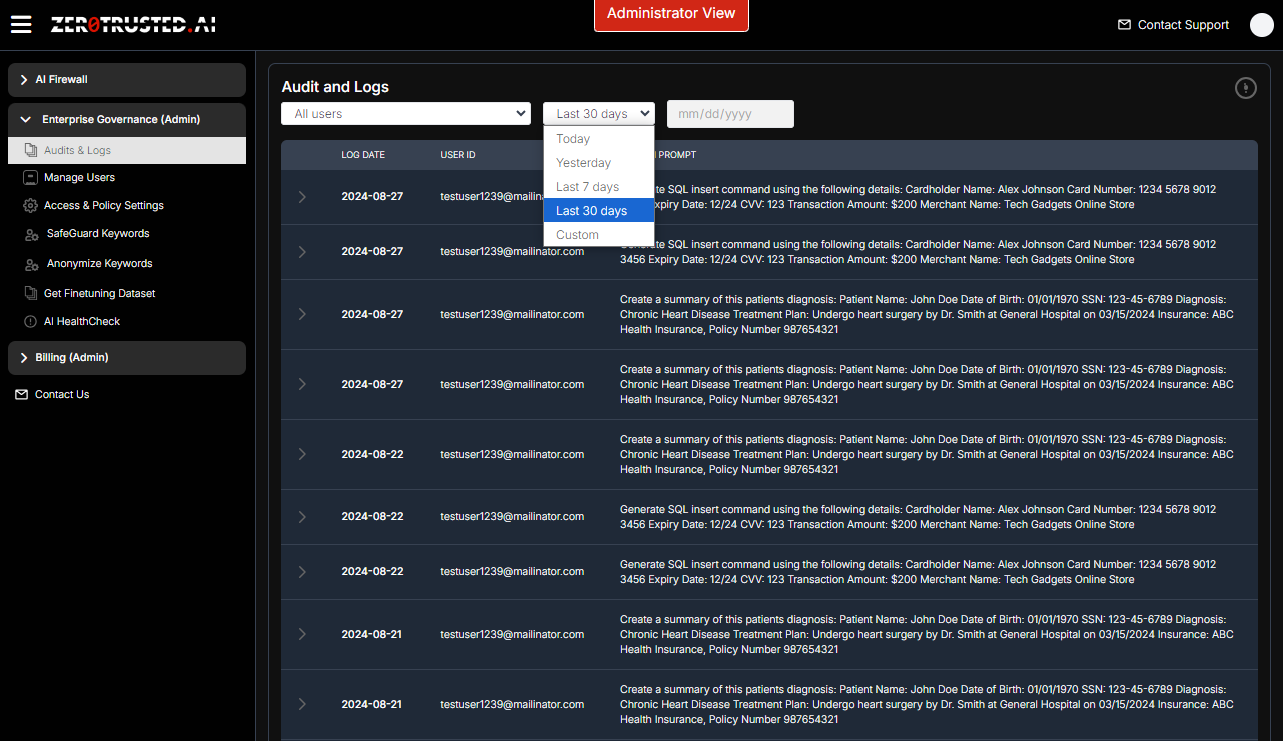

Gain full visibility into what your organization sends to—and receives from—LLMs, AI Agents, AI Gateways, and integrated systems. ZeroTrusted.ai continuously monitors AI activity, capturing detailed audit logs with full chain-of-custody tracking for users, agents, protocols, and tools. Export directly to your SIEM/SOAR or leverage our built-in AI Judge to identify and assess issues related to security, privacy, reliability, and AI ethics. Our integrated anomaly analysis engine detects data drift, AI alignment failures, insider threats—including rogue agent behavior—and other high-risk events in real time, ensuring transparency and control across your entire AI operation.
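
To make the chain-of-custody idea concrete, here is a minimal sketch of a tamper-evident audit log. It is not ZeroTrusted.ai's implementation: hash chaining is one common technique, assumed here for illustration, and the function names (`append_entry`, `verify_chain`) and record fields are hypothetical.

```python
import hashlib
import json


def _digest(body):
    # Hash a canonical JSON form so field order cannot change the result.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, user, agent, tool, prompt):
    """Append an audit record that commits to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {
        "user": user,
        "agent": agent,
        "tool": tool,
        "prompt": prompt,
        "prev_hash": prev_hash,
    }
    log.append({**body, "hash": _digest(body)})
    return log


def verify_chain(log):
    """Return True only if no record was altered, removed, or reordered."""
    prev_hash = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Because each record includes the hash of the one before it, editing any earlier entry invalidates every later one, which is what makes the trail checkable after export to a SIEM/SOAR.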

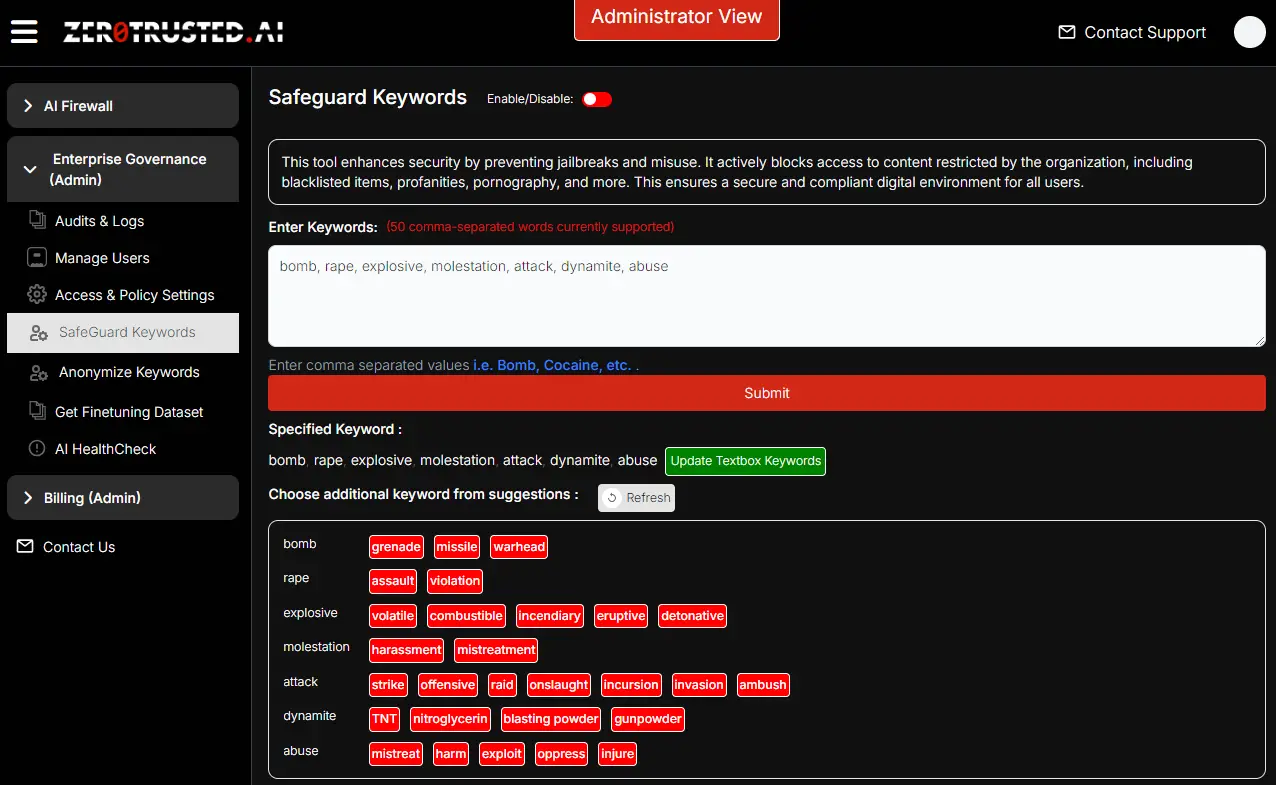

Safeguard your sensitive data by building custom keyword lists to block or anonymize critical terms—ensuring proprietary IP, project names, or regulated phrases are never fed into AI without authorization. ZeroTrusted.ai’s AI Judge enhances this protection by identifying and blocking similar or aggregated terms that could reveal sensitive information over time, helping you stay secure even as language evolves.
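
As a rough illustration of how a custom keyword list can block or anonymize terms, consider the sketch below. It assumes simple case-insensitive matching; the rule table, the `[REDACTED]` placeholder, and the `apply_keyword_rules` function are hypothetical and not part of the ZeroTrusted.ai API. It also does not attempt the similarity or aggregation detection that the AI Judge provides.

```python
import re

# Hypothetical keyword list: each term maps to "block" or "anonymize".
KEYWORD_RULES = {
    "Project Falcon": "block",
    "ACME-7781": "anonymize",
}


def apply_keyword_rules(prompt, rules=KEYWORD_RULES):
    """Return (allowed, sanitized_prompt) for a prompt checked against the rules."""
    # Block rules run first so a blocked prompt is never partially forwarded.
    for term, action in rules.items():
        if action == "block" and re.search(re.escape(term), prompt, re.IGNORECASE):
            return False, ""

    # Remaining rules replace each matching term with a neutral placeholder.
    sanitized = prompt
    for term, action in rules.items():
        if action == "anonymize":
            sanitized = re.sub(
                re.escape(term), "[REDACTED]", sanitized, flags=re.IGNORECASE
            )
    return True, sanitized
```

A caller would forward `sanitized_prompt` to the LLM only when `allowed` is true, and log the decision either way.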

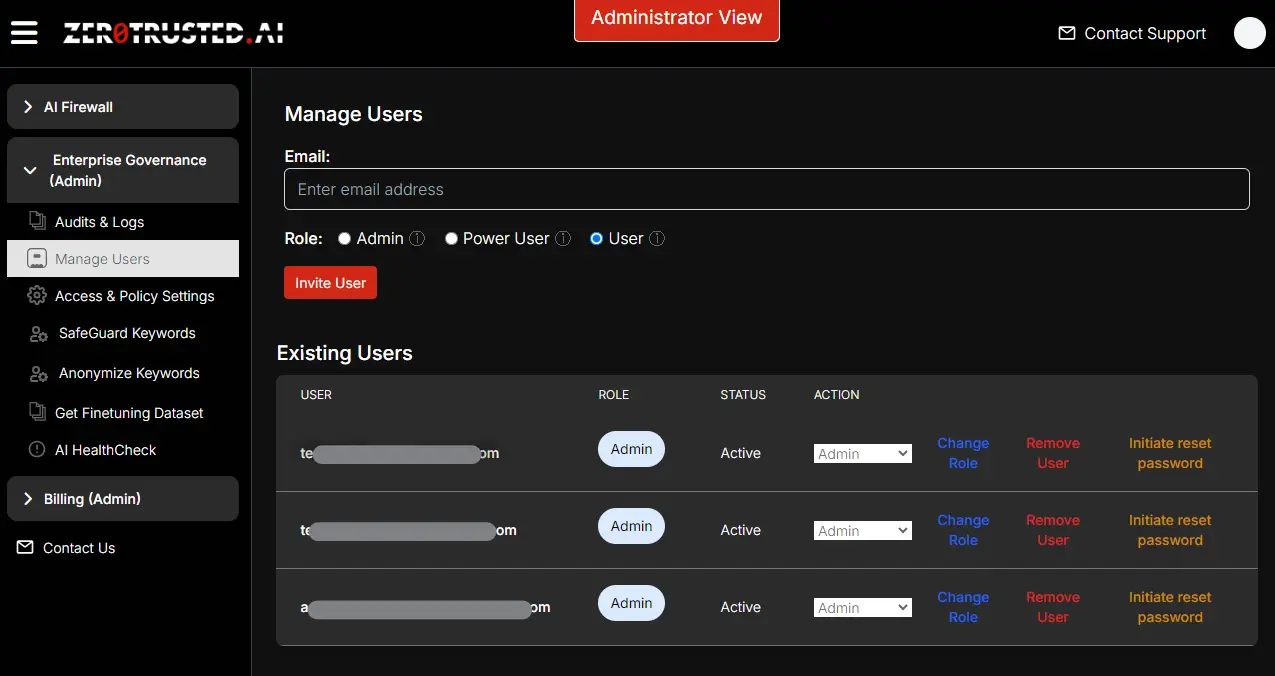

Manage user access with ease—bring your own Identity and Access Management (IDAM) system or use ours. ZeroTrusted.ai enables granular control over which employees can access specific AI models, agents, and tools, ensuring the right people have the right access at the right time.
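
Granular access control of this kind can be pictured as a mapping from groups to the AI resources they may use. The sketch below is an assumption-laden illustration: the group names, resource names, and `is_allowed` helper are hypothetical, and a real deployment would pull group membership from your IDAM system.

```python
# Hypothetical policy: group name -> AI models, agents, and tools it may use.
POLICY = {
    "engineering": {"gpt-4", "code-agent"},
    "finance": {"gpt-4"},
}


def is_allowed(user_groups, resource, policy=POLICY):
    """Allow access if any of the user's groups has been granted the resource."""
    return any(resource in policy.get(group, set()) for group in user_groups)
```

For example, a user who belongs only to `finance` could query `gpt-4` but would be denied `code-agent`, and a user with no groups is denied by default.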

| Feature | Description | ZeroTrusted.ai's AGS | OpenAI Teams |
| --- | --- | --- | --- |
| Team Users | | | |
| Multi Users | Multiple users can be added to one plan | Unlimited | 13 users |
| Privacy | | | |
| Compliance Violation Detection & Prevention | Detects and prevents compliance violations | | |
| Anonymize Prompts | Maintain prompt anonymity when utilizing LLMs to ensure privacy | | |
| Keyword SafeGuard | Blocks custom words to prevent jailbreaks | | |
| Streaming Data and Synchronous | Option to stream data for lower latency, or to respond synchronously to mitigate streaming-data attacks | | |
| Disable History | Lets the user disable saving of history | | |
| No Data Harvesting | Prompt data is not used for training LLMs | | |
| Governance | | | |
| Compliance Violation Detection & Prevention | Monitor, detect, and prevent compliance violations across the user base or enterprise-wide | | |
| Anonymize Prompts | Configure and enforce prompt anonymity when utilizing LLMs to ensure privacy across the user base or enterprise-wide | | |
| Keyword SafeGuard | Configure and enforce blocking of custom words to prevent jailbreaks across the user base or enterprise-wide | | |
| Streaming Data and Synchronous | Option to stream data for lower latency, or to respond synchronously to mitigate streaming-data attacks | | |
| Disable History | Enable or disable saving of history across the user base or enterprise-wide | | |
| No Data Harvesting | Prompt data can never be used for training LLMs | | |
| LLMs | | | |
| Number of LLM Providers | Supports multiple third-party LLMs such as Claude, Cohere, PaLM, GPT-3.5, and GPT-4, as well as custom LLMs such as the Llama series | 7 LLM providers | 1 LLM provider |
| Decentralization & Distribution | Using multiple LLMs gives the option to choose LLMs from independent entities rather than being controlled by a single organization | 5 LLMs (parallel usage) | 1 LLM (parallel usage) |
| LLM Ensemble Strategy | Uses multiple LLMs to produce varied responses, then ranks them to select the best | 5 LLMs (parallel usage) | 1 LLM (parallel usage) |
| Analysis and Reporting | Number of monthly tokens per user | 100,000 tokens | 1,000,000 tokens |
| API Access | | | |
| Access to API | Access to API | | |
| User Interface | ChatGPT-like interface to query LLMs | | |
| Deployment | | | |
| On-Premise or Cloud | Deploy the entire AGS stack (UI, APIs, runtime library, etc.) in your own environment | | |
| Integrations | | | |
| LangChain Integrations | Access to ZeroTrusted.ai's LangChain tools | | |
| Zapier Integrations | Access to ZeroTrusted.ai's Zapier Zaps | | |
| Azure Cloud Integrations | Access to ZeroTrusted.ai's app on Azure Apps Marketplace | | |
| Improved Accuracy | | | |
| Improve Accuracy & Reliability | Enhance accuracy by optimizing prompts and validating results | | |
| Provide Explanations for Results | Explains how and why the results were selected | | |
| Prevent Hallucinations | Verify that LLM outputs are not fabricated | | |
| Analysis and Reporting | Prompt search analysis and reporting | | |
| Threats & Attacks | | | |
| Identify Injection Attacks | Safeguard against malicious inputs designed to exploit model vulnerabilities | | |
| Detect Data Poisoning | Detect poisoned results by identifying outliers among the multiple results generated by the LLM ensemble | | |
| Discover Ethics and Bias Issues | Detect ethical violations and biases in results | | |
| Prevent Jailbreaks | Prevent jailbreaks through custom rule-based logic that allows companies to flag or block given keywords | | |
| Security & Data Privacy | | | |
| ztPolicyServer - Secure Gateway as a Service | Secure Gateway as a Service | | |
| ztDataPrivacy - End to End Encryption (E2EE) | Check for instances of compliance breaches and sensitive data such as Personally Identifiable Information (PII), Payment Card Industry (PCI) data, etc. Rectify any issues by appropriately sanitizing the data. | | |
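
The LLM Ensemble Strategy and Detect Data Poisoning rows describe ranking responses from several LLMs and treating outliers as suspect. The sketch below shows one simple way that could work, under stated assumptions: responses are scored by their average word-overlap (Jaccard similarity) with the other responses, and anything below a threshold is flagged. The scoring method, the `0.2` threshold, and all function names are hypothetical choices for illustration, not the product's actual algorithm.

```python
def jaccard(a, b):
    """Word-level Jaccard similarity between two strings (0.0 to 1.0)."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a and not tokens_b:
        return 1.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def rank_by_agreement(responses):
    """Rank models by how closely their response agrees with the others.

    `responses` maps a model name to its response text. Returns a list of
    (model_name, score) pairs, highest agreement first.
    """
    scores = {}
    for name, text in responses.items():
        others = [jaccard(text, t) for n, t in responses.items() if n != name]
        scores[name] = sum(others) / len(others) if others else 0.0
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def flag_outliers(responses, threshold=0.2):
    """Return the models whose agreement score falls below the threshold."""
    return [name for name, score in rank_by_agreement(responses) if score < threshold]
```

Word overlap is a crude stand-in for semantic similarity; an embedding-based comparison would be a natural substitute, but the ranking and thresholding steps would stay the same.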